How GPU Memory Virtualization Is Breaking AI's Biggest Bottleneck

Published Dec 6, 2025

In the last two weeks, GPU memory virtualization and disaggregation have moved from an infrastructure curiosity to a fast-growing production trend, because models and simulations increasingly need tens to hundreds of gigabytes of VRAM. Read this and you'll know what's changing, why it matters to your AI, quant, or biotech workloads, and what to do next. The core idea: software-defined pooled VRAM (virtualized memory, disaggregated memory pools, and communication-optimized tensor parallelism) makes many smaller GPUs look like one large memory space. That means you can train larger or more specialized models, host denser agentic workloads, and run bigger Monte Carlo or molecular simulations without buying a new fleet. The tradeoffs are paging latency, new failure modes, and security and isolation risks. Immediate steps: profile memory footprints, adopt GPU-aware orchestration, refactor for sharding and checkpointing, and plan for a mix of hardware generations.
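To make "profile memory footprints" concrete, here is a rough back-of-the-envelope sketch of why pooling or sharding is needed. It assumes the common mixed-precision Adam rule of thumb of about 16 bytes per parameter (fp16 weights and gradients, fp32 master weights, and two fp32 Adam moments) and ignores activations, KV caches, and framework overhead, so treat the results as a lower bound. The 80 GB per-GPU capacity and the 7B-parameter model are illustrative assumptions.

```python
import math

# Rule of thumb for mixed-precision Adam training (an assumption, not a measurement):
# 2 B fp16 weights + 2 B fp16 grads + 4 B fp32 master weights + 8 B Adam moments.
BYTES_PER_PARAM_TRAINING = 16
GB = 1e9  # decimal gigabytes keep the arithmetic readable


def training_state_gb(num_params: float) -> float:
    """Model plus optimizer state in GB, ignoring activations and overhead."""
    return num_params * BYTES_PER_PARAM_TRAINING / GB


def per_gpu_gb(num_params: float, num_gpus: int) -> float:
    """State per GPU if weights, grads and optimizer state are sharded evenly."""
    return training_state_gb(num_params) / num_gpus


def min_gpus(num_params: float, gpu_capacity_gb: float = 80.0) -> int:
    """Smallest GPU count whose pooled capacity holds the sharded state."""
    return math.ceil(training_state_gb(num_params) / gpu_capacity_gb)


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    print(f"total state: {training_state_gb(params):.0f} GB")
    print(f"per GPU when sharded across 4: {per_gpu_gb(params, 4):.0f} GB")
    print(f"minimum 80 GB GPUs: {min_gpus(params)}")
```

Under these assumptions a 7B model needs about 112 GB of training state: more than any single 80 GB GPU holds, but within reach of two pooled or sharded GPUs before activations are counted.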

The Shift to Domain‐Specific Foundation Models Every Tech Leader Must Know

Published Dec 6, 2025

If your teams still bet on generic LLMs, you're facing diminishing returns: over the last two weeks the industry has accelerated toward enterprise-grade, domain-specific foundation models. You'll learn why this matters, what these stacks look like, and what to watch next. Three forces drove the shift: generic models stumble on niche terminology and protocol rules; high-quality domain datasets have matured over the last 2–3 years; and tooling for safe adaptation (secure connectors, parameter-efficient tuning such as LoRA/QLoRA, retrieval, and domain evals) is now enterprise-ready. In practice, these stacks layer a base foundation model, domain pretraining or adaptation, retrieval and tools (backtests, lab instruments, CI), and guardrails. Impact: better correctness, better-calibrated outputs, and tighter integration into trading, biotech, and engineering workflows, but watch for data bias, IP leakage, and regulatory requirements. Immediate signs to monitor: vendor domain-tuning blueprints, open-weight domain models, and platform tooling that treats adaptation and evaluation as first-class.
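LoRA, one of the parameter-efficient tuning methods mentioned above, freezes a pretrained weight matrix W (shape d×k) and learns a low-rank update B·A, with B of shape d×r and A of shape r×k, scaled by alpha/r. The pure-Python sketch below shows the adapted forward pass and the parameter savings; the shapes, rank, and alpha values are illustrative assumptions, and a real project would use an established library rather than hand-rolled matrices.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    r = len(a)  # rank r = number of rows in A
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    return [h + (alpha / r) * d for h, d in zip(base, delta)]


def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """(full fine-tuning params, LoRA trainable params) for one d x k weight."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 weight (toy values)
    a = [[0.5, 0.5]]              # A: r x k with r = 1
    b = [[1.0], [-1.0]]           # B: d x r
    print(lora_forward(w, a, b, [2.0, 4.0], alpha=1.0))
    print(lora_param_counts(4096, 4096, 8))
```

For a 4096×4096 projection at rank 8, LoRA trains 65,536 parameters instead of about 16.8 million (roughly 0.4%), which is what makes per-domain adapters cheap to train and store. Because B is conventionally initialized to zero, the adapted model starts out identical to the base model.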

Quantum Leap: Fault-Tolerant Hardware Moves from Lab to Reality

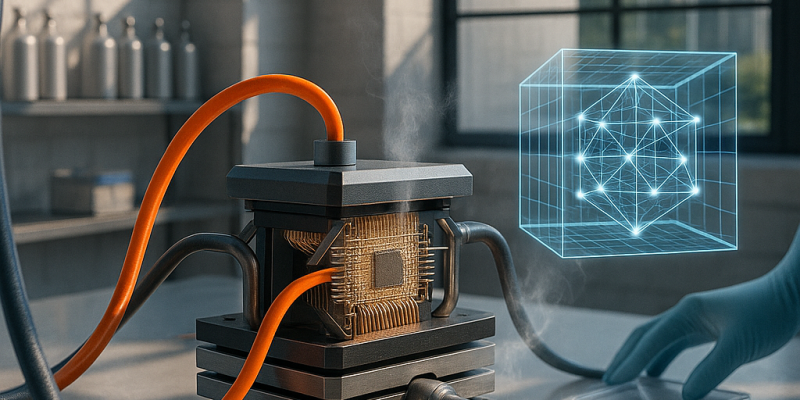

Published Nov 22, 2025

Worried that quantum computing is shifting from lab hype to real business risk and opportunity? Read this and you'll know what changed, when, who's involved, the key numbers, and the near-term outlook. In November 2025, IQM unveiled Halocene (Nov 13), an on-prem quantum error correction (QEC) platform with 150 physical qubits and a 99.7% two-qubit fidelity target, slated for commercial availability by end-2026. IBM revealed Nighthawk (Nov 2025), a 120-qubit processor with 218 tunable couplers, alongside a move to 300 mm wafer fabrication that it says doubles R&D speed and enables a 10× increase in chip complexity, plus qLDPC decoding in under 480 ns. Quantinuum launched Helios (Nov 5–6) with 98 fully connected qubits, 99.9975% single-qubit fidelity, 48 logical qubits at a 2:1 encoding ratio, and NVIDIA GB200 integration, with a 2026 deployment planned in Singapore. Microsoft committed DKK 1bn to topological qubit manufacturing in Lyngby. Impact: a faster path to fault-tolerant quantum computing for AI, finance, biotech, security, and infrastructure. Near term: expect commercial systems and regional deployments in 2026; watch gate fidelity, logical-qubit ratios, and sub-microsecond decoding.
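When tracking gate fidelity and logical-qubit ratios, it helps to turn the headline percentages into error rates and physical-qubit budgets. The sketch below does that arithmetic for the figures quoted above. It treats the encoding ratio as a plain multiplier (physical qubits per logical qubit), which is a simplifying assumption: real codes may need extra ancilla and routing qubits.

```python
def error_rate(fidelity_percent: float) -> float:
    """Convert a gate fidelity quoted in percent into an error probability."""
    return 1.0 - fidelity_percent / 100.0


def physical_qubits_needed(logical: int, physical_per_logical: int) -> int:
    """Physical qubits consumed, treating the encoding ratio as a plain multiplier."""
    return logical * physical_per_logical


def fits(logical: int, physical_per_logical: int, available_physical: int) -> bool:
    """Does the encoded register fit on the device?"""
    return physical_qubits_needed(logical, physical_per_logical) <= available_physical


if __name__ == "__main__":
    # Figures quoted in the announcements above.
    print(f"Helios single-qubit error rate: {error_rate(99.9975):.1e}")
    print(f"Halocene two-qubit error target: {error_rate(99.7):.1e}")
    print(f"Helios 48 logical at 2:1 uses {physical_qubits_needed(48, 2)} of 98 qubits:",
          fits(48, 2, 98))
```

An error rate of 2.5e-5 corresponds to roughly one error per 40,000 single-qubit operations, which is why the logical-qubit ratio, not raw qubit count, is the figure to watch.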

Google’s Antigravity Turns Gemini 3 Pro into an Agent-First Coding IDE

Published Nov 18, 2025

Worried about opaque AI agents silently breaking builds? Here's what happened, why it matters, and what to do next. On 2025-11-18 Google unveiled Antigravity, an agent-first coding environment built on Gemini 3 Pro and available in public preview for Windows, macOS, and Linux; it also supports Claude Sonnet 4.5 and GPT-OSS. Antigravity embeds agents in IDEs and terminals, with Editor and Manager views, persistent memory, human feedback, and verifiable Artifacts (task lists, plans, screenshots, browser recordings). Gemini 3 Pro previews in November 2025 showed 200,000- and 1,000,000-token context windows, enabling long-form and multimodal workflows. This shifts developer productivity, trust, and platform architecture, and it raises risks (overreliance, complexity, cost, privacy). Immediate actions: invest in prompt design, agent orchestration, and observability and artifact storage, and monitor regional availability, benchmark comparisons, and pricing.

Google Unveils Gemini 3.0 Pro: 1T-Parameter, Multimodal, 1M-Token Context

Published Nov 18, 2025

Worried your AI can't handle whole codebases, videos, or complex multi-step reasoning? Here's what to expect. Google announced Gemini 3.0 Pro and its Deep Think mode, reported as a >1-trillion-parameter Mixture-of-Experts model (roughly 15–20B parameters active per query) with native text, image, audio, and video inputs, two context tiers (200,000 and 1,000,000 tokens), and stronger agentic tool use. Reported benchmarks: GPQA Diamond 91.9%, Humanity's Last Exam 37.5% without tools and 45.8% with tools, and ScreenSpot-Pro 72.7%. Preview API access opened to select enterprise users in November 2025, with a broader release expected in December 2025 and general availability in early 2026. Why it matters: you can build longer-context, multimodal, reasoning-heavy apps, but you should plan for higher compute and latency, privacy risks from audio and video, and robustness testing. Immediate watch items: independent benchmark validation, tooling integration, pricing for the 200k vs 1M token tiers, and modality-specific safety controls.
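Google has not published Gemini 3's internal routing in detail, so the following is only a generic illustration of how a Mixture-of-Experts model can hold over a trillion parameters while activating a small fraction per query: a router scores every expert, keeps the top k, and mixes only those experts' outputs. The toy experts, router scores, and k = 2 below are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def top_k_gate(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                logits: Sequence[float], k: int = 2) -> float:
    """Only the selected experts run; unselected experts cost no compute."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(logits, k))


if __name__ == "__main__":
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: v * v, lambda v: -v]
    router_logits = [0.1, 2.0, 2.0, -1.0]  # hypothetical router scores for one token
    print(top_k_gate(router_logits, k=2))
    print(moe_forward(3.0, experts, router_logits, k=2))
    # Active fraction implied by the figures above: 15-20B of ~1T parameters.
    print(f"{15e9 / 1e12:.1%} to {20e9 / 1e12:.1%} of parameters active")
```

The last line shows why per-query compute scales with the active parameters (about 1.5–2% here) rather than the total, while memory still has to hold every expert.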

Retrieval Is the New AI Foundation: Hybrid RAG and Trove Lead

Published Nov 18, 2025

Worried about sending sensitive documents to the cloud? Two research releases show you can get competitive accuracy while keeping data local. On Nov 3, 2025, Trove shipped as an open-source retrieval toolkit that cuts memory use 2.6× and adds live filtering, dataset transforms, hard-negative mining, and multi-node runs. On Nov 13, 2025, a local hybrid RAG system combined semantic embeddings with keyword search to answer legal, scientific, and conversational queries entirely on device. Why it matters: privacy, latency, and cost trade-offs now favor hybrid and on-device retrieval for regulated customers and production deployments. Immediate moves: integrate hybrid retrieval early, vet vector DBs for privacy, latency, and hybrid-search support, use Trove-style evaluation with hard negatives, and build internal pipelines for domain tests. Outlook: ~80% confidence that RAG becomes central to AI stacks within the next 12 months.
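The summary does not say how the local hybrid system merges its semantic and keyword results. One widely used option is Reciprocal Rank Fusion (RRF), which scores each document by summing 1/(k + rank) across retrievers. A minimal sketch, assuming each retriever already returns document IDs in rank order and using the conventional k = 60; the document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of doc IDs; ranks are 1-based."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


if __name__ == "__main__":
    semantic = ["contract_7", "memo_2", "case_9"]    # from embedding search
    keyword = ["case_9", "contract_7", "statute_4"]  # from keyword (e.g. BM25) search
    print(reciprocal_rank_fusion([semantic, keyword]))
```

Documents that both retrievers rank well rise to the top, so exact-match terms such as statute numbers or gene names that embeddings can miss still surface. RRF needs only ranks, not comparable scores, which keeps it simple to run on device.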

Edge AI Revolution: 10-bit Chips, TFLite FIQ, Wasm Runtimes

Published Nov 16, 2025

Worried your mobile AI is slow, costly, or leaking data? Recent product and hardware moves show a fast shift to on-device models, and here's what you need to know. On 2025-11-10 TensorFlow Lite added Full Integer Quantization for masked language models, trimming model size by ~75% and cutting latency 2–4× on mobile CPUs. Apple chips (reported 2025-11-08) now support 10-bit weights for better mixed-precision accuracy. Wasm advances (wasmCloud's 2025-11-05 wash-runtime and ahead-of-time (AoT) compiled Wasm results) deliver binaries up to 30× smaller and cold starts ~16% faster. That means lower cloud costs, better privacy, and faster UX for AR, voice, and vision apps, but you must manage accuracy loss, hardware variability, and tooling gaps. Immediate moves: invest in quantization-aware pipelines, keep compressed and full-precision fallbacks, test on target hardware, and watch public quantization benchmarks and new accelerator announcements; adoption looks likely (estimated 75–85% confidence).
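The ~75% size reduction follows from storing each weight as a one-byte int8 instead of a four-byte float32. The sketch below shows the affine (scale and zero-point) mapping that 8-bit integer quantization uses, where real ≈ scale × (q − zero_point). It is a pure-Python illustration on toy weights, not TensorFlow Lite's converter, which also calibrates activation ranges on representative data.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # int8 range


def affine_params(values: Sequence[float]) -> Tuple[float, int]:
    """Scale and zero point mapping [min, max] (widened to include 0) onto int8."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # guard against an all-zero tensor
    zero_point = max(QMIN, min(QMAX, round(QMIN - lo / scale)))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """real -> int8: q = clamp(round(x / scale) + zero_point)."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(qs: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """int8 -> real: x ~= scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qs]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 0.8, 1.5]  # toy float32 weights
    scale, zp = affine_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    size_reduction = 1 - (len(q) * 1) / (len(weights) * 4)  # int8 vs float32 bytes
    print(q, f"max error {max_err:.4f}", f"size reduction {size_reduction:.0%}")
```

Widening the range to include zero keeps 0.0 exactly representable (useful for padding and ReLU outputs), and the rounding error per weight stays within half a quantization step; that bounded error is what quantization-aware pipelines and full-precision fallbacks are there to manage.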

Agentic AI Workflows: Enterprise-Grade Autonomy, Observability, and Security

Published Nov 16, 2025

In early November 2025, Google Cloud updated Vertex AI Agent Builder with a self-heal plugin, Go support, a single-command deployment CLI, dashboards for token, latency, and error monitoring, a testing playground and traces tab, and security features such as Model Armor and Security Command Center. Vertex AI Agent Engine runtime pricing also begins on November 6, 2025 in several regions (Singapore, Melbourne, London, Frankfurt, Netherlands). These moves accelerate enterprise adoption of agentic AI workflows by improving autonomy, interoperability, observability, and security, while forcing teams to plan for region-specific costs. Academic results reinforce the gains: Sherlock (2025-11-01) improved accuracy by ~18.3%, cut cost by ~26%, and reduced execution time by up to 48.7%; Murakkab reported up to 4.3× lower cost, 3.7× less energy, and 2.8× less GPU use. Immediate priorities: monitor self-heal adoption and regional pricing, and invest in observability, verification, and embedded security; outlook confidence ~80–90%.
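The dashboards above track tokens, latency, and errors. If you also want an in-house view over your own traces, a minimal aggregation might look like the sketch below; the `AgentCall` record and its fields are hypothetical and not part of any Vertex AI API, and percentiles use the simple nearest-rank method.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AgentCall:
    """One traced agent or tool call (hypothetical schema, not a Vertex AI type)."""
    tokens: int
    latency_ms: float
    ok: bool


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    idx = max(0, math.ceil(p / 100 * len(ordered)) - 1)
    return ordered[idx]


def summarize(calls: List[AgentCall]) -> Dict[str, float]:
    """Token, error and latency summary; assumes at least one call."""
    latencies = [c.latency_ms for c in calls]
    return {
        "calls": len(calls),
        "total_tokens": sum(c.tokens for c in calls),
        "error_rate": sum(not c.ok for c in calls) / len(calls),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }


if __name__ == "__main__":
    # Ten synthetic calls; call 7 fails.
    trace = [AgentCall(tokens=100 * i, latency_ms=float(i * 10), ok=(i != 7))
             for i in range(1, 11)]
    print(summarize(trace))
```

Tail latency (p95) and error rate tend to matter more than averages for agents, because one slow or failing tool call can stall an entire multi-step plan.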

OpenAI Turbo & Embeddings: Lower Cost, Better Multilingual Performance

Published Nov 16, 2025

Over the past 14 days OpenAI rolled out several API updates. New embedding models text-embedding-3-small and text-embedding-3-large arrived: small is 5× cheaper than the previous generation (text-embedding-ada-002) and raised the MIRACL score from 31.4% to 44.0%, while large scores 54.9%. A GPT-4 Turbo preview (gpt-4-0125-preview) fixes a bug affecting non-English UTF-8 generations and improves code completion. An upgraded GPT-3.5 Turbo (gpt-3.5-turbo-0125) brings better format adherence and encoding fixes, with input pricing down 50% and output pricing down 25%. A consolidated moderation model (text-moderation-007) rounds out the release. These changes lower retrieval and inference costs, improve multilingual and long-context handling for RAG and global products, and tighten moderation pipelines; OpenAI reports that 70% of GPT-4 API requests have moved to GPT-4 Turbo. Near term: expect a GA rollout of GPT-4 Turbo with vision in the coming months, and keep monitoring benchmarks, adoption, and embedding-dimension trade-offs.
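On the embedding-dimension trade-off: the text-embedding-3 models accept a `dimensions` request parameter, and OpenAI's embeddings guide also describes shortening an existing embedding by truncating it and re-normalizing to unit length. A minimal sketch of that post-hoc step, using toy six-value vectors as stand-ins for real embeddings (text-embedding-3-small returns 1536 dimensions by default).

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def shorten(embedding: Sequence[float], dims: int) -> List[float]:
    """Keep the first `dims` values, then re-normalize to unit length."""
    return l2_normalize(embedding[:dims])


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


if __name__ == "__main__":
    doc = [0.12, -0.40, 0.33, 0.05, -0.21, 0.08]    # toy document embedding
    query = [0.10, -0.35, 0.30, 0.02, 0.25, -0.05]  # toy query embedding
    for dims in (6, 3):
        print(dims, round(cosine(shorten(doc, dims), shorten(query, dims)), 3))
```

Shorter vectors cut vector-database storage and search cost roughly in proportion to the dimension, at some loss in retrieval quality, so it is worth re-running your own retrieval evals at each candidate size.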

EU AI Act Triggers Global Compliance Overhaul for General‐Purpose AI

Published Nov 16, 2025

As of 2 August 2025, the EU AI Act's obligations for providers of general-purpose AI (GPAI) models apply across the EU, imposing transparency, copyright, and safety and security rules on models placed on the market; models already on the market before that date must comply by 2 August 2027. GPAI models with systemic risk, which the Act presumes when cumulative training compute exceeds 10^25 FLOPs, face additional notification duties and elevated safety and security measures (10^23 FLOPs is the Commission's indicative threshold for a model to count as GPAI at all). A July 2025 template now mandates public summaries of training data, a voluntary Code of Practice finalized on 10 July 2025 helps providers demonstrate compliance, and Commission enforcement starts 2 August 2026, including fines for GPAI providers of up to 3% of global annual turnover or €15 million (the Act's separate 7% ceiling applies to prohibited AI practices). Impact: product release strategies, contracts, and deployments must align to avoid delisting or penalties. Immediate actions: classify models under the GPAI criteria, run documentation and safety gap analyses, and decide whether to sign the Code of Practice.
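A first-pass triage for "classify models under the GPAI criteria" is to estimate training compute. The sketch below uses the widely used approximation of about 6 × parameters × training tokens FLOPs for dense transformers, and compares the result with 10^23 FLOPs (the Commission's indicative GPAI threshold) and 10^25 FLOPs (the Act's presumption of systemic risk, Article 51). Compute is only one criterion: GPAI status also depends on generality and capabilities, so treat this as a screening aid, not a legal determination. The model sizes are hypothetical.

```python
# Thresholds as described above; check them against the current legal texts.
GPAI_INDICATIVE_FLOPS = 1e23  # Commission guidelines: indicative GPAI criterion
SYSTEMIC_RISK_FLOPS = 1e25    # AI Act Art. 51(2): presumption of systemic risk


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate dense-transformer training compute as 6 * N * D."""
    return 6.0 * num_params * num_tokens


def classify(flops: float) -> str:
    """Screening label based on training compute alone."""
    if flops > SYSTEMIC_RISK_FLOPS:
        return "GPAI, presumed systemic risk"
    if flops > GPAI_INDICATIVE_FLOPS:
        return "meets indicative GPAI compute criterion"
    return "below indicative GPAI threshold"


if __name__ == "__main__":
    for name, n, d in [("7B on 2T tokens", 7e9, 2e12),
                       ("70B on 15T tokens", 70e9, 15e12),
                       ("400B on 15T tokens", 400e9, 15e12)]:
        f = training_flops(n, d)
        print(f"{name}: {f:.1e} FLOPs -> {classify(f)}")
```

Under this approximation a 7B model trained on 2T tokens (about 8.4e22 FLOPs) falls below the indicative threshold, while a 400B model on 15T tokens (about 3.6e25 FLOPs) would trigger the systemic-risk presumption.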