Vibe Coding with AI Is Breaking Code Reviews — Fix Your Operating Model

Published Dec 6, 2025

Is your team drowning in huge, AI-generated PRs? In the past 14 days engineers have reported a surge of “vibe coding”: heavy LLM-authored code dumped into massive pull requests (Reddit, r/ExperiencedDevs, 2025-12-05; 2025-11-21) that add unnecessary abstractions and misaligned APIs, forcing senior engineers to spend 12–15 hours/week on reviews (Reddit, 2025-11-20). That mismatch between fast generation and legacy review norms raises operational and market risk for fintech, quant, and production systems. Teams are responding with clear fixes: green/yellow/red zoning for AI use, hard limits on PR diff size, mandatory design docs and tests, and treating AI like a junior engineer whose work must be specified and validated. For leaders: codify machine-readable architecture guides, add AI-aware CI checks, and log AI involvement. Those steps turn a short-term bottleneck into a durable advantage.
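One way a hard limit on PR diff size could be enforced in CI is sketched below. It parses the text produced by `git diff --numstat` (tab-separated added lines, deleted lines, and path, with `-` for binary files) and compares the total against a budget. The 400-line budget and the names `DiffStats`, `parse_numstat`, and `within_budget` are illustrative assumptions, not something the reported teams are known to use.

```python
from dataclasses import dataclass

# Hypothetical budget; pick a number that matches your team's review capacity.
MAX_CHANGED_LINES = 400


@dataclass
class DiffStats:
    files: int
    added: int
    deleted: int

    @property
    def changed(self) -> int:
        """Total lines touched (added plus deleted)."""
        return self.added + self.deleted


def parse_numstat(numstat: str) -> DiffStats:
    """Summarise `git diff --numstat` output: 'added<TAB>deleted<TAB>path' per line."""
    files = added = deleted = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        files += 1
        # Binary files are reported as '-' for both counts; count the file only.
        if parts[0] != "-":
            added += int(parts[0])
        if parts[1] != "-":
            deleted += int(parts[1])
    return DiffStats(files, added, deleted)


def within_budget(stats: DiffStats, max_changed: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the diff is small enough to review under the assumed budget."""
    return stats.changed <= max_changed


if __name__ == "__main__":
    sample = "10\t2\tsrc/a.py\n-\t-\tlogo.png\n500\t0\tsrc/generated.py\n"
    stats = parse_numstat(sample)
    print(stats.changed, within_budget(stats))
```

In a pipeline, the output of `git diff --numstat` against the target branch would be passed to `parse_numstat`, and the job would fail when `within_budget` returns `False`. Generated or vendored paths would probably need an exemption list, which this sketch leaves out.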

Agent 365, Vertex, Gemini: The Rise of Governed Multi-Agent AI

Published Nov 22, 2025

Worried about unmanaged AI bots causing chaos? With good reason: over the past two weeks major players moved from prototypes to platform tools, and this piece tells you what changed and what to watch. In early November 2025 Google pushed Vertex AI Agent Builder updates (around 2025-11-07): an ADK with prebuilt plugins (including a self-heal plugin), Go support, one-command deploys, observability dashboards, and Model Armor plus a Security Command Center. The same day Google expanded the Gemini API (Gemini 2.5) to support JSON Schema and libraries like Pydantic/Zod for reliable multi-agent outputs. Microsoft followed around 2025-11-18 with Agent 365, a centralized agent registry with real-time oversight, now in early access. Why it matters: governance, inter-agent interoperability, autonomous/resilient workflows, and lower dev barriers. Key risks: agent sprawl, prompt injection, coordination errors, and unpredictable performance. Watch agent coordination metrics, schema adoption, governance frameworks, and regulated-industry integrations next.
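To illustrate why schema-constrained outputs help multi-agent handoffs, here is a minimal sketch that checks one agent's JSON reply against a small schema before another agent consumes it. It uses only the Python standard library and a hand-rolled checker covering a tiny subset of JSON Schema (`required`, `type`, `enum`). It is a stand-in for what Pydantic or Zod would do and does not call the Gemini API. The schema fields (`task_id`, `status`, `confidence`) and function names are hypothetical.

```python
import json

# Hypothetical handoff contract between two agents.
HANDOFF_SCHEMA = {
    "type": "object",
    "required": ["task_id", "status", "confidence"],
    "properties": {
        "task_id": {"type": "string"},
        "status": {"type": "string", "enum": ["done", "failed", "needs_review"]},
        "confidence": {"type": "number"},
    },
}

# Mapping from JSON Schema type names to Python types (subset only).
_JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate(instance, schema) -> list:
    """Return a list of human-readable errors; an empty list means valid."""
    if not isinstance(instance, dict):
        return ["expected a JSON object"]
    errors = []
    for key in schema.get("required", []):
        if key not in instance:
            errors.append(f"missing required field: {key}")
    for key, rule in schema.get("properties", {}).items():
        if key not in instance:
            continue
        value = instance[key]
        # bool is a subclass of int in Python, so reject it for numeric fields.
        bool_mismatch = isinstance(value, bool) and rule["type"] != "boolean"
        if bool_mismatch or not isinstance(value, _JSON_TYPES[rule["type"]]):
            errors.append(f"{key}: expected {rule['type']}")
        elif "enum" in rule and value not in rule["enum"]:
            errors.append(f"{key}: {value!r} not in {rule['enum']}")
    return errors


def parse_agent_reply(raw: str) -> dict:
    """Parse and validate a reply, raising ValueError instead of passing bad data on."""
    data = json.loads(raw)
    errors = validate(data, HANDOFF_SCHEMA)
    if errors:
        raise ValueError("; ".join(errors))
    return data


if __name__ == "__main__":
    print(parse_agent_reply('{"task_id": "t1", "status": "done", "confidence": 0.9}'))
```

Rejecting a malformed reply at the boundary keeps a single agent's formatting slip from turning into a coordination error further down the chain. A production system would more likely rely on a full validator library than on this subset.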

Quantum Leap: Fault-Tolerant Hardware Moves from Lab to Reality

Published Nov 22, 2025

Worried quantum is shifting from lab hype to real business risk and opportunity? Read this and you’ll know what changed, when, who’s involved, key numbers, and the near-term outlook. In November 2025 IQM unveiled Halocene (Nov 13), an on-prem QEC platform with 150 physical qubits and a 99.7% two-qubit fidelity target, slated for commercial availability by end-2026. IBM revealed Nighthawk (Nov 2025) with 120 qubits and 218 tunable couplers, a move to 300 mm wafers to double R&D speed and raise chip complexity 10×, and qLDPC decoding in <480 ns. Quantinuum launched Helios (Nov 5–6): 98 fully connected qubits, 99.9975% single-qubit fidelity, 48 logical qubits at 2:1 encoding, and NVIDIA GB200 integration for a 2026 deployment in Singapore. Microsoft committed DKK 1bn to topological qubit manufacturing in Lyngby. Impact: a faster path to fault-tolerant quantum for AI, finance, biotech, security and infrastructure. Near term: commercial systems and regional deployments in 2026; watch gate fidelity, logical-qubit ratios and sub-microsecond decoding.

Millisecond Qubits and Logical Qubits Bring Quantum Advantage Closer

Published Nov 20, 2025

Worried quantum computing is still all promise and no product? Here’s what you’ll get: a concise read of hard milestones and why they change timelines. On 2025-11-05 Princeton published a Nature result showing a tantalum-on-silicon qubit with >1 millisecond coherence (≈3× previous lab devices, ~15× industrial baseline), and on 2025-11-12 IBM unveiled its Loon chip and Nighthawk (Nighthawk due public by end‐2025) as steps toward utility, plus Heron now runs circuits with 5,000 two‐qubit gates (performance example: 2.2 vs 112 hours). Quantinuum’s Helios turned 98 physical barium ions into 48 logical qubits (≈2:1 overhead) with gate fidelities of 99.9975%/99.921%, and IonQ+NVIDIA showed a hybrid chemistry workflow. These advances cut error‐correction pressure, enable deeper circuits and hybrid use cases, and make logical‐qubit demos, fidelity at scale, and tooling the things you should watch in the next 6–12 months.
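The two headline ratios in this summary can be checked with simple arithmetic. The sketch below uses only the figures quoted above (98 physical and 48 logical qubits; 2.2 and 112 hours). The function names are illustrative, and the runtime ratio simply divides the two quoted durations without assuming what workloads they refer to.

```python
def encoding_overhead(physical_qubits: int, logical_qubits: int) -> float:
    """Physical qubits needed per logical qubit."""
    return physical_qubits / logical_qubits


def runtime_ratio(slow_hours: float, fast_hours: float) -> float:
    """How many times longer the slower quoted run time is than the faster one."""
    return slow_hours / fast_hours


if __name__ == "__main__":
    print(round(encoding_overhead(98, 48), 2))  # 2.04, i.e. roughly 2:1
    print(round(runtime_ratio(112, 2.2), 1))    # 50.9
```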

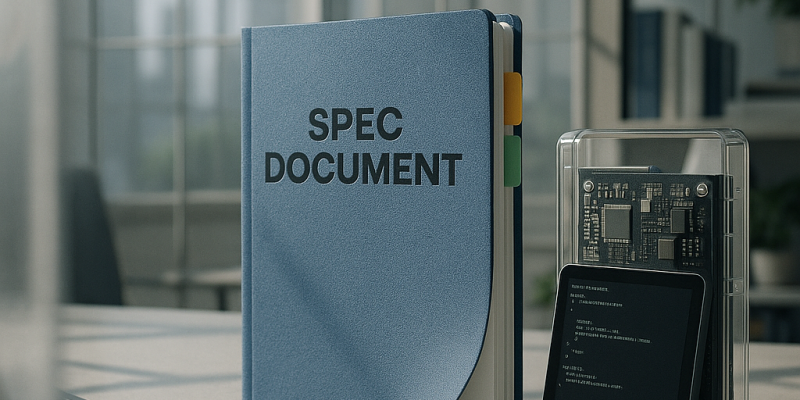

Spec-Driven Development Is Going Mainstream — GitHub’s Spec Kit Leads

Published Nov 20, 2025

Tired of brittle AI code and lost prompt history? This brief tells you what changed, why it matters, and what to watch next. GitHub’s Spec Kit updated to v0.0.85 on 2025-11-15 and the spec-kit-plus fork advanced multi-agent templates (v0.0.17, 2025-10-28). Academics released SLD-Spec (2025-09-12) achieving 95.1% assertion correctness and ~23.7% runtime reduction for complex loops, and SpecifyUI (2025-09-09) introduced SPEC to improve UI fidelity. Why it matters: spec-first workflows promise faster first-pass correctness, clearer audits, and less tech debt but demand upfront governance, training and tooling—estimates show 20–40% feature overhead. Risks include spec ambiguity, model limits and growing spec/context complexity. Immediate actions: pilot Spec Kit templates, add spec review gates and monitor CI validation and real-world spec-as-source case studies. Confidence that SDD becomes mainstream in 12–18 months: ~80%.

Edge AI Meets Quantum: MMEdge and IBM Reshape the Future

Published Nov 19, 2025

Latency killing your edge apps? Read this: two near-term advances could change where AI runs. MMEdge (arXiv:2510.25327) is a recent on-device multimodal framework that pipelines sensing and encoding and uses temporal aggregation and speculative skipping to start inference before full inputs arrive. Tested in a UAV and on standard datasets, it cuts end-to-end latency while keeping accuracy. IBM unveiled Nighthawk (120 qubits, 218 tunable couplers; up to 5,000 two-qubit gates; testing late 2025) and Loon (112 qubits, six-way couplers) as stepping stones toward fault-tolerant QEC and a Starling system by 2029. Why it matters to you: faster, deterministic edge decisions for AR/VR, drones, medical wearables; new product and investment opportunities; and a need to track edge latency benchmarks, early quantum demos, and hardware–software co-design.

Fault-Tolerant Quantum Computing Is Near: IBM, QuEra Accelerate Timelines

Published Nov 18, 2025

Think fault‐tolerant quantum is decades away? Mid‐November 2025 developments say otherwise, and here’s what you need fast: on 2025‐11‐12 IBM unveiled Nighthawk (120 qubits, 218 tunable couplers, 30% more circuit complexity) and Loon (hardware elements for fault tolerance), while IBM’s qLDPC decoders ran 10× faster, dynamic circuits gained 24% accuracy, and error mitigation cut some costs by >100×. QuEra (with Harvard/Yale) published in Nature a low‐overhead fault‐tolerance method that uses one syndrome extraction per logical layer, slashing runtime overhead. Why it matters: these shifts move verified quantum advantage toward 2026 and realistic fault tolerance toward a 2029 Starling target (confidence ~80%). Watch quantum‐advantage demos, logical vs. physical error rates, qLDPC adoption, fabrication/yield, and decoder latency (<480 ns) as immediate next indicators.

Rust Cuts Android Memory Bugs 1,000× — Faster Reviews, Fewer Rollbacks

Published Nov 18, 2025

Worried legacy C/C++ bugs are dragging down security and speed? Here’s what you need from Google’s Nov 13, 2025 data: Android platform memory-safety issues dropped below 20% of vulnerabilities, Rust shows a 1,000× lower vulnerability density versus C/C++, new Rust changes have 4× lower rollback rates and spend 25% less time in code review, and Rust is being used in firmware, kernel-adjacent stacks and parsers. A near-miss (CVE-2025-48530) in unsafe Rust was caught pre-release and was non‐exploitable thanks to the Scudo allocator, underscoring the need for training and unsafe‐code controls. Bottom line: memory safety is shifting from a checkbox to an engineering productivity lever—start embedding Rust in new systems code, tighten unsafe‐block governance, and track platform penetration, tooling, and policy adoption.

Tokenized RWAs Poised to Hit $2 Trillion by 2028

Published Nov 18, 2025

Think tokenized assets are still fringe? Institutional capital and forecasts say otherwise — here’s what you need to know and what to watch. In recent weeks Canton Network raised US$135M from firms including Goldman Sachs, BNP Paribas and DTCC to scale tokenization of bonds, gold, repo and digital cash; Standard Chartered projects tokenized RWAs to jump from about US$35B today to US$2T by 2028 (largely on Ethereum); and private credit added about US$24B in H1 2025. Regulators (U.S. GENIUS Act, Digital Asset Market Clarity Act; EU, Singapore, UAE) and standards (ERC-1400/3643), custody and identity tooling are maturing. Why it matters: institutional product, liquidity and revenue models could shift, but custody, liquidity and uneven regulation remain risks. Watch issuance volumes, compliance adoption, secondary trading and TradFi–blockchain partnerships; confidence in this trend is ~80–85% over 3–5 years.

Google’s Antigravity Turns Gemini 3 Pro into an Agent-First Coding IDE

Published Nov 18, 2025

Worried about opaque AI agents silently breaking builds? Here’s what happened, why it matters, and what to do next: on 2025-11-18 Google unveiled Antigravity (public preview), an agent-first coding environment layered on Gemini 3 Pro (Windows/macOS/Linux) that also supports Claude Sonnet 4.5 and GPT-OSS; it embeds agents in IDEs/terminals with Editor and Manager views, persistent memory, human feedback, and verifiable Artifacts (task lists, plans, screenshots, browser recordings). Gemini 3 Pro previews in November 2025 showed 200,000- and 1,000,000-token context windows, enabling long-form and multimodal workflows. This shifts developer productivity, trust, and platform architecture—and raises risks (overreliance, complexity, cost, privacy). Immediate actions: invest in prompt design, agent orchestration, observability/artifact storage, and monitor regional availability, benchmark comparisons, and pricing.